Ashleigh Bruton

Through artificial intelligence (AI), online pornographic content now features almost anyone imaginable – even those who never consented.

In a now-removed Facebook ad, a woman who looks identical to Emma Watson appears to engage in a sexually suggestive act. She bites her lip flirtatiously as the camera pans down to show her kneeling before the viewer. However, she is not the Harry Potter star that the public has grown to love. She is an anonymous individual who has had Watson’s face superimposed onto her own.

The ad in question was for “Facemega”, a deepfake app that claimed any user can “swap any face into the video!” with the click of a button. A re-upload of the video took Twitter by storm, gaining the attention of 3.3 million viewers. Many expressed their disgust and concern, questioning the ethical nature of AI-generated pornography and the dangers that arise when this technology falls into the wrong hands.

i got this ad yesterday and wow what the hell pic.twitter.com/smGiR3MfMb

— Lauren Barton (@laurenbarton03) March 6, 2023

What is a “Deepfake”?

A deepfake is a piece of digital media, typically a video, in which artificial intelligence is used to replace the facial features of one individual with those of another. Because they are built with machine-learning algorithms, deepfakes can be hyper-realistic, making it hard for viewers to tell whether they are real or fake.

Harmless deepfakes do exist, as seen with the airing of the comedy show “Deepfake Neighbour Wars” on ITV. However, deepfake pornography is the most produced and sought-after content of this nature. A 2019 study conducted by Deeptrace uncovered that around 96% of deepfakes online contain sexually explicit content, and almost all of the “participants” are women who have not consented. Western actors like Emma Watson, Scarlett Johansson, and Millie Bobby Brown are among the most well-known faces used in deepfakes.

It is not only musicians and Hollywood stars who are endangered by this advanced technology. Earlier this year, Pokimane, QTCinderella, and SweetAnita expressed outrage when fellow streamer Atrioc accidentally exposed his subscription to a deepfake website that hosts explicit content of other streamers. Some of the faces seen on the website were those of his friends and colleagues on Twitch.

I want to scream.

Stop.

Everybody fucking stop. Stop spreading it. Stop advertising it. Stop.

Being seen “naked” against your will should NOT BE A PART OF THIS JOB. Thank you to all the male internet “journalists” reporting on this issue. Fucking losers @HUN2R

— QTCinderella (@qtcinderella) January 30, 2023

The Unsettling Dangers of AI-pornography

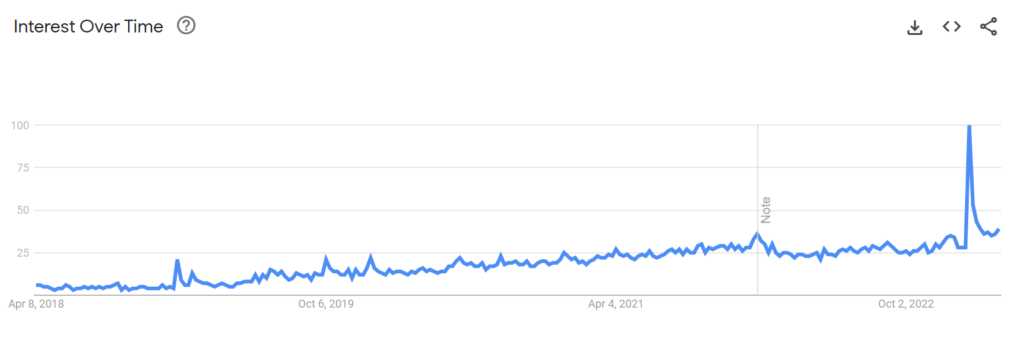

Access to deepfake pornography is now easier than ever. Websites and services that cater to this demand are shared anonymously through Reddit forums and Discord chats. Some sites can even be found through Google: looking up “deepfake porn” introduces searchers to a range of websites that host vast catalogues of nonconsensual media, with one infamous website raking in millions of visitors per month. Google search data revealed a spike in users searching for this content after Atrioc’s deepfake scandal.

These services not only make image-based sexual abuse widely accessible, but also pride themselves on the ease with which content can be created. With a few clicks, porn videos can be produced starring celebrities who never consented. A quick glance at the most prominent websites within this market reveals that thousands of videos are produced daily, catering to any kink imaginable.

The simplicity of deepfake creation means that anyone, celebrity or not, can have their likeness stolen to make pornographic material. A recent NBC News report uncovered that deepfake creators using Discord to promote these websites also advertised a customisable “personal girl” service. The article shed light on one creator who offered to make content of any woman with a small Instagram following for $65.

What is Being Done to Stop Deepfakes?

In November 2022, the UK Government proposed an amendment to the existing Online Safety Bill to help prosecute the creation and distribution of AI-manipulated pornography. However, as of April 2023, there are no laws within the UK that regulate deepfake content. Instead, victims who wish to take legal action have to rely on existing laws covering defamation, harassment, privacy, and data protection.

While these laws exist, bringing a case to court presents its own challenges, since the creators of these deepfakes are often anonymous and difficult to identify. Furthermore, little can be done to prevent the spread of illegal content, and tracking its distribution across multiple platforms becomes increasingly hard once videos go viral.

Featured image courtesy of Mika Baumeister on Unsplash. Image license found here. No changes have been made to this image.